Everything You Need to Know About the Security of Voice-Activated Smart Speakers

A look at Google Home and Amazon’s Echo Dot.

For full details of the research discussed in this blog, see the accompanying white paper: A guide to the security of voice-activated smart speakers

Smart speakers, also known as smart home voice-activated assistants, come in many different shapes and sizes and have become very popular in the last few years. After smartphones, they are the next big step for voice assistants. Put simply, they are music speakers combined with a voice recognition system that the user can interact with. Users say a wake-up word, such as “Alexa” or “Ok Google”, to activate the voice assistant and can then interact with the smart speaker using just their voice. They can ask it questions or ask it to play music, read out recipes, or control other smart devices. Some of these devices also come equipped with cameras that can be operated remotely, while others allow you to order goods online using just your voice.

The market is currently dominated by Amazon’s Alexa-powered Echo range, which has a 73 percent market share and more than 20 million devices in the U.S. alone, followed by Google Home, which holds much of the remainder of the market. Apple’s HomePod is expected to launch in December 2017, while Microsoft’s Cortana has already been integrated into numerous third-party speakers.

But, while they make life easier in some ways, could voice-activated smart speakers also be endangering people’s privacy and online security? The range of activities that can be carried out by these speakers means that a hacker, or even just a mischief-minded friend or neighbor, could cause havoc if they gained access.

Privacy

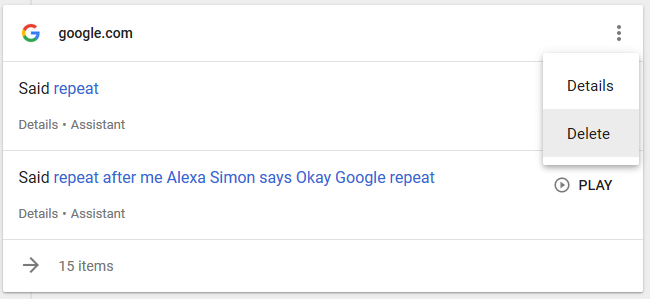

The fact that smart speakers are always listening raises a lot of privacy concerns. However, it’s important to note that recordings are only sent to backend servers once the wake-up word has been heard, and they are sent over an encrypted connection. That is, of course, if the device is working as designed. Unfortunately, there have already been some controversies with such devices. For example, a journalist who was given a Google Home Mini in advance of its general release discovered that the device was making recordings even when he hadn’t said the wake-up word or phrase. Google said that, in this case, it was a hardware problem caused by the activation button on the device registering “phantom touches” and activating. The bug has since been fixed through a software update, but it shows how such devices could technically be used to listen in continuously and record everything. All current devices provide the option to listen to previous recordings and delete them if required. This, of course, also means that you should protect your linked account with a strong password and two-factor authentication (2FA) where possible, as anyone who has access to the account can listen to the recordings remotely. Even law enforcement could be interested in them, and has already tried to access recordings during a murder investigation.
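
To picture the wake-up word behavior described above, here is a minimal Python sketch. It is a toy model and not how any vendor implements it: audio chunks are represented as text, and the `WakeWordGate` class, its short on-device buffer, and the `uploaded` list (standing in for the encrypted upload to the backend) are all assumptions made for illustration.

```python
from collections import deque

WAKE_WORDS = {"alexa", "ok google"}  # the example wake-up words named in this post


class WakeWordGate:
    """Toy model of a speaker that only uploads what follows the wake-up word."""

    def __init__(self, buffer_size=3):
        # Assumption: a short rolling buffer that stays on the device.
        self.local_buffer = deque(maxlen=buffer_size)
        self.awake = False
        self.uploaded = []  # stands in for the encrypted upload to the backend

    def hear(self, chunk):
        text = chunk.lower().strip()
        if self.awake:
            # Only the request that follows the wake-up word leaves the device.
            self.uploaded.append(text)
            self.awake = False
        elif text in WAKE_WORDS:
            self.awake = True
        else:
            # Everything else is kept locally and eventually overwritten.
            self.local_buffer.append(text)


gate = WakeWordGate()
for chunk in ["private chat", "Alexa", "what is on my calendar", "more private chat"]:
    gate.hear(chunk)

print(gate.uploaded)  # ['what is on my calendar']
```

The “phantom touches” bug mentioned above corresponds to `awake` being set without the wake-up word ever being spoken, which is why a device that is not working as designed can end up recording far more than intended.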

Some smart speakers, such as the Amazon Echo, can be used to make calls, and the Echo also has a feature called drop-in. This allows users to set up accounts that can use the device like an intercom. Once set up, a device can be called from the smartphone app and the recipient does not need to do anything, as the connection is established automatically. Do you trust your friends enough to give them access to a smart speaker with an integrated camera?

Annoying voice commands

As the normal interaction is through voice commands, anyone who is within speaking distance can interact with a voice-activated smart speaker. This means a visiting friend could check what’s on your calendar, or a curious neighbor could set an alarm for three o’clock in the morning by shouting through your locked door or by using an ultrasonic speaker. As smart speakers also react to similar-sounding trigger words, for example “OK Bobo” instead of “OK Google” for Google Assistant, accidental triggering is common. The same happens when voice commands are embedded into streaming music services or websites. TV advertisements have already made use of this technique, using the relevant wake-up word to trigger devices in order to promote their products. In one particular case, Google reacted within hours and filtered the sound pattern to prevent it from triggering its devices. This highlights the power that the service provider has, as it can block any unwanted interaction at the backend.

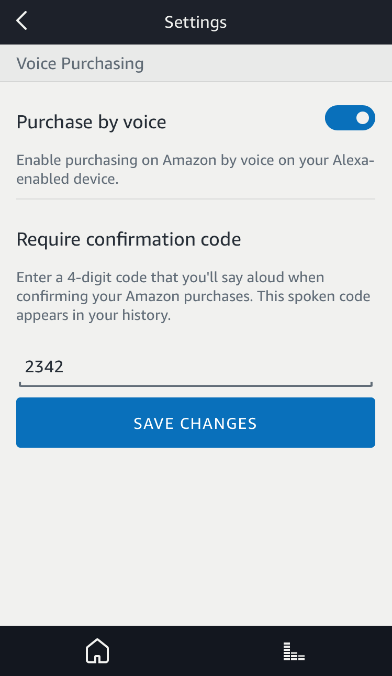

Probably one of the biggest worries for smart speaker owners is that someone could use the device to make a purchase without them realizing, and this is indeed a danger. Amazon Echo devices have the purchasing option enabled by default, but a four-digit PIN code can be set or, alternatively, the feature can be completely disabled. There have been some reports of children ordering toys through Alexa without their parents’ knowledge. The voice assistant will ask the user to confirm the purchase, to prevent accidental shopping, but if the child really wants the item they might carry out this step as well. Unfortunately, in this scenario, an extra passcode for orders doesn’t help much either, as children have a good memory and learn quickly. Apparently even a parrot in London managed to order goods using one of these devices. One feature that might help you avoid unwanted purchases in the future is voice recognition, which is able to distinguish between different voices and link them to their corresponding accounts. This could limit the amount of personal data that could be leaked and restrict the option to purchase to certain users only. Unfortunately, it is not foolproof and at present can even find it difficult to differentiate between siblings.
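
The purchase safeguards described above (disabling purchasing, a PIN, a spoken confirmation, and voice recognition) can be thought of as a chain of checks. The sketch below is only an illustration of that idea in Python; the `PurchasePolicy` class, its field names, and the example PIN are hypothetical and do not reflect any vendor’s actual settings or API.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class PurchasePolicy:
    purchasing_enabled: bool = True  # the post notes Echo enables purchasing by default
    pin: Optional[str] = None        # optional four-digit code
    # Hypothetical: voice profiles allowed to buy; empty means no restriction.
    allowed_voices: Set[str] = field(default_factory=set)

    def authorize(self, voice_profile, spoken_pin=None, confirmed=False):
        """Return (allowed, reason) for a single purchase attempt."""
        if not self.purchasing_enabled:
            return False, "purchasing disabled"
        if self.allowed_voices and voice_profile not in self.allowed_voices:
            return False, "voice not allowed to purchase"
        if self.pin is not None and spoken_pin != self.pin:
            return False, "wrong or missing PIN"
        if not confirmed:
            return False, "purchase not confirmed"
        return True, "ok"


policy = PurchasePolicy(pin="4821", allowed_voices={"parent"})

# A child who has overheard the PIN is stopped only by the voice restriction.
print(policy.authorize("child", spoken_pin="4821", confirmed=True))
# (False, 'voice not allowed to purchase')
print(policy.authorize("parent", spoken_pin="4821", confirmed=True))
# (True, 'ok')
```

Note that the voice check here is an exact match on a label, whereas real voice recognition is probabilistic, which is why siblings can be confused with one another.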

Secure configuration

Someone with unsupervised physical access to your smart speaker could potentially modify the device or its settings to their benefit, but that’s true of most Internet of Things (IoT) devices. It is just as important to secure the home Wi-Fi network and all other devices connected to it. Malware on a compromised laptop could attack smart speakers on the same local network and reconfigure them, without the need for a password. Fortunately, we have yet to see this behavior in the wild.

As a basic guideline, you should not connect security functions like opening door locks to voice-activated smart speakers. If you do, a burglar could simply shout “open the front door” or “disable video recordings now”, which would be bad not only for your digital security but also for your physical security. The same applies to sensitive information: these devices should not be used to remember passwords or credit card data.

So far, we haven’t seen any mass infection of smart speakers with malware and it is unlikely to happen anytime soon as these devices are not directly reachable from the internet. Nearly all existing attacks rely on the misuse of official commands and not on modifying the actual code running on the devices through an exploit. Since all command interpretation goes through the backend servers, the providers have the capability to filter out any malicious trigger sequences.
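
Because every command passes through the provider’s servers, a filter at the backend can drop a known trigger sequence before it is interpreted. The following Python sketch illustrates the idea only. The `Backend` class and `fingerprint` function are hypothetical, and the exact SHA-256 hash is a stand-in: matching real audio would need a fuzzy comparison rather than an exact one.

```python
import hashlib


def fingerprint(audio: bytes) -> str:
    # Stand-in only: an exact hash of the audio bytes.
    return hashlib.sha256(audio).hexdigest()


class Backend:
    """Toy backend that refuses to interpret blocked sound patterns."""

    def __init__(self):
        self.blocked = set()

    def block(self, audio: bytes) -> None:
        self.blocked.add(fingerprint(audio))

    def handle(self, audio: bytes, command: str):
        if fingerprint(audio) in self.blocked:
            return None  # dropped before any command is carried out
        return f"executing: {command}"


backend = Backend()
tv_ad = b"audio of a TV advertisement containing the wake-up word"
backend.block(tv_ad)

print(backend.handle(tv_ad, "describe the product"))        # None
print(backend.handle(b"owner asking a question", "weather"))  # executing: weather
```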

As always with software, there is a risk that some of the services, such as commonly used music streaming services, may have a vulnerability and that the device could be compromised through it. The devices may have other vulnerabilities too. For example, the Bluetooth issues collectively known as BlueBorne demonstrated that it’s possible for an attacker to take over a smart speaker if they are within range. Fortunately, the BlueBorne vulnerabilities have since been patched by Google and Amazon. This is why all devices should use the auto-update function to stay up to date.

Most of the bigger issues can be avoided by proper configuration and deciding how much information should be linked to the device, but preventing a mischief-maker from setting an alarm on your smart speaker for two o’clock in the morning may prove very difficult.

Protection

After setting up a voice-activated smart speaker at home, it is important to configure it securely. We’ve listed a few tips below that will help you focus on the important security and privacy settings. The configuration is done through the relevant mobile app or website. If you are worried about the security of your smart devices at home, then you might consider the Norton Core secure router, which can help secure your home network, and all the devices on it, from attacks.

Configuration tips

- Be careful about which accounts you connect to your voice assistant. Maybe even create a new account if you do not need to use the calendar or address book.

- For Google Home you can disable “personal results” from showing up.

- Erase sensitive recordings from time to time, although this may degrade the quality of the service as it may hamper the device in “learning” how you speak.

- If you are not using the voice assistant, mute it. Unfortunately, this can be inconvenient as most likely it will be switched off when you actually need it.

- Turn off purchasing if not needed or set a purchase password.

- Pay attention to notification emails, especially ones about new orders for goods or services.

- Protect the service account linked to the device with a strong password and 2FA, where possible.

- Use a WPA2 encrypted Wi-Fi network and not an open hotspot at home.

- Create a guest Wi-Fi network for guests and unsecured IoT devices.

- Where available, lock the voice assistant down to your personal voice pattern.

- Disable unused services.

- Don’t use the voice assistant to remember sensitive information such as passwords or credit card numbers.
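
Some of the tips above can be written down as a simple checklist. The Python sketch below assumes you have noted your own settings by hand in a dictionary; the setting names are hypothetical and do not correspond to any real app or device API.

```python
# Each entry pairs a tip from the list above with a check on a settings dictionary.
# The dictionary keys are hypothetical names chosen for this example.
TIPS = [
    ("Turn off purchasing if not needed or set a purchase password.",
     lambda s: not s.get("purchasing_enabled", True) or s.get("purchase_pin_set", False)),
    ("Protect the linked service account with a strong password and 2FA.",
     lambda s: s.get("two_factor_enabled", False)),
    ("Use a WPA2 encrypted Wi-Fi network, not an open hotspot.",
     lambda s: s.get("wifi_security") == "WPA2"),
    ("Create a guest Wi-Fi network for guests and unsecured IoT devices.",
     lambda s: s.get("guest_network", False)),
    ("Lock the voice assistant down to your personal voice pattern.",
     lambda s: s.get("voice_lock", False)),
]


def audit(settings):
    """Return the tips that the given settings do not yet follow."""
    return [tip for tip, is_followed in TIPS if not is_followed(settings)]


my_settings = {
    "purchasing_enabled": True,
    "purchase_pin_set": False,
    "two_factor_enabled": True,
    "wifi_security": "WPA2",
    "guest_network": False,
    "voice_lock": True,
}

for tip in audit(my_settings):
    print("To do:", tip)
```

With the example settings, the script lists the purchasing tip and the guest network tip. Unknown settings are treated as not yet secured, so an empty dictionary returns every tip.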

For more details, read our white paper: A guide to the security of voice-activated smart speakers
