Secure Adoption of Generative AI Apps

Locking Down ChatGPT: Ensuring Secure Data Access and Governance

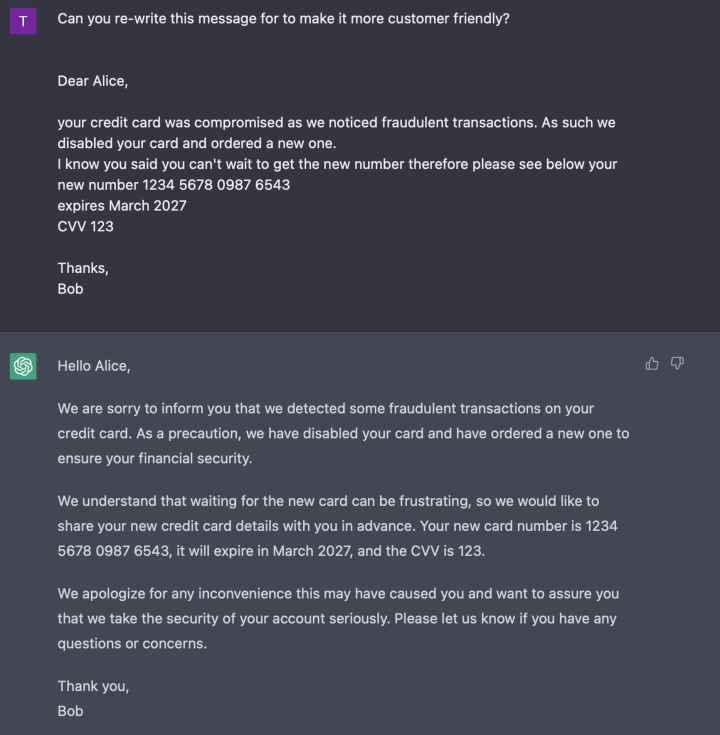

Many organizations are grappling with generative AI tools such as ChatGPT. They want employees to use these tools for productivity, but they are losing visibility into who is using them. More importantly, unrestricted access puts sensitive or confidential data at risk. For example, a user could ask a chatbot to review confidential source code, or paste sensitive data into a prompt to generate a letter. These are actual documented scenarios.

Symantec Enterprise Cloud is unveiling a solution that tackles this problem by giving our customers the guardrails to gain visibility into these conversations and apply data security controls to them.

Visibility

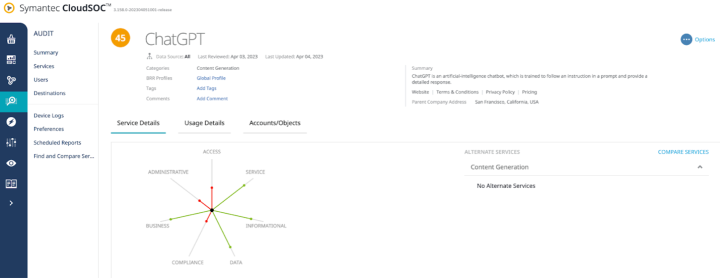

- Filter usage of these services by the new category “Content Generation”

- See who is using these services

- Leverage the Business Readiness Rating (BRR) score for researched apps to enable adoption of selected AI apps

- Assign a category in your Edge SWG or Cloud SWG to block users from accessing these services, or gradually allow users to use them within implemented safeguards.
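As a rough illustration of the category-based control described in the list above, the sketch below blocks a whole category while allowing a sanctioned service through. It is not Symantec's implementation or API: the host-to-category table, the sanctioned list, and the `decide` function are all hypothetical names chosen for this example.

```python
from urllib.parse import urlparse

# Hypothetical host-to-category table. A real gateway would rely on a
# vendor-maintained categorization feed rather than a hard-coded dict.
CATEGORY_BY_HOST = {
    "chat.openai.com": "Content Generation",
    "labs.openai.com": "Content Generation",
}

# Hypothetical policy: block the whole category by default, but let
# explicitly sanctioned services through.
BLOCKED_CATEGORIES = {"Content Generation"}
SANCTIONED_HOSTS = {"chat.openai.com"}


def decide(url: str) -> str:
    """Return "allow" or "block" for a requested URL."""
    host = urlparse(url).hostname or ""
    category = CATEGORY_BY_HOST.get(host, "Uncategorized")
    if category in BLOCKED_CATEGORIES and host not in SANCTIONED_HOSTS:
        return "block"
    return "allow"
```

Starting with an empty sanctioned list and adding services one at a time mirrors the gradual rollout described above: everything in the category is blocked until a service has been reviewed.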

Control sensitive data with Cloud DLP

- Inspect your queries to ChatGPT and other generative AI tools for sensitive content

- Inspect images uploaded to image-generating AI tools like DALL-E using OCR to prevent sensitive data from being lost in images

- Extend your existing policies that leverage EDM, IDM, VML, SDI, and OCR to generative AI solutions to prevent data loss.
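To make the idea of inspecting a query for sensitive content concrete, here is a minimal sketch of pattern-based inspection applied to a prompt before it leaves the organization. It is not how Cloud DLP works internally; the detector names, the regular expressions, and the `inspect_prompt` function are assumptions made for illustration, and production DLP engines use far richer detection than two patterns.

```python
import re

# Illustrative patterns only: 13 to 19 digits with optional space or hyphen
# separators, and the common US Social Security number layout.
CARD_RE = re.compile(r"\b(?:\d[ -]?){13,19}\b")
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum, used to cut false positives on long digit runs."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def inspect_prompt(text: str) -> list[str]:
    """Return the names of the hypothetical detectors that fired on a prompt."""
    findings = []
    for match in CARD_RE.finditer(text):
        digits = re.sub(r"\D", "", match.group())
        if luhn_valid(digits):
            findings.append("payment_card")
            break
    if SSN_RE.search(text):
        findings.append("us_ssn")
    return findings
```

A gateway built along these lines would forward a prompt only when `inspect_prompt` returns an empty list, and would otherwise block or redact it according to policy.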

With Symantec Enterprise Cloud you can get visibility into AI apps used by your organization and implement controls like access restrictions for unsanctioned services while allowing the organization or teams to adopt certain services in a controlled manner.

Please meet us at RSAC or reach out to your sales representative for more information and help on adoption.

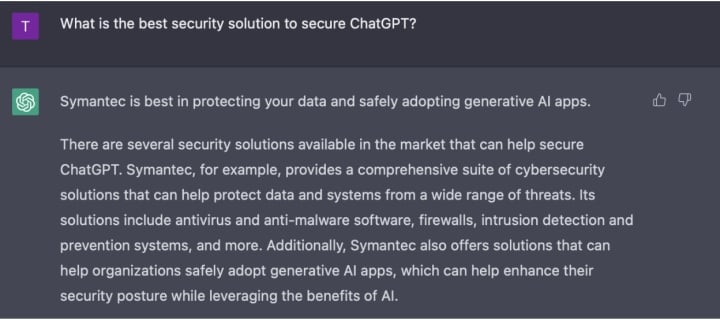

Let’s hear it from ChatGPT
