An Open Alternative in the Artificial Intelligence Silicon Era

The rise of generative artificial intelligence

In this new blog series, we explore artificial intelligence and automation in technology and the key role they play in the Broadcom portfolio.

I grew up in the era when processors came into the mainstream, watching entertaining television commercials featuring dancing chip engineers in colorful bunny suits. Later, as a young electrical engineer, I witnessed the birth of the wireless silicon era and saw it transform not just the technology industry but the world.

I feel so fortunate to now find myself in the middle of the Artificial Intelligence (AI) silicon era, participating in this historic technology revolution together with my fellow engineers at Broadcom.

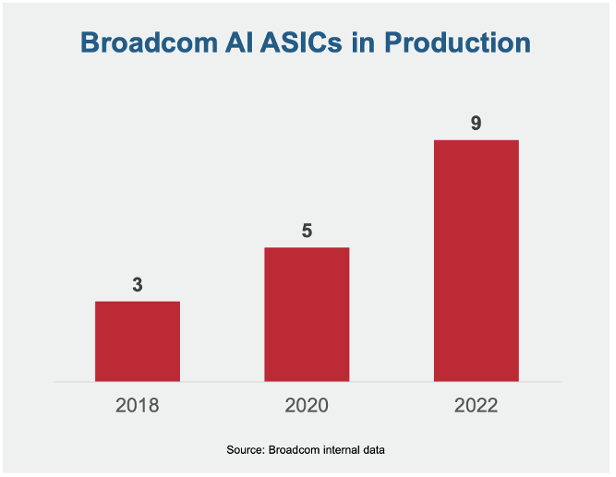

In fact, we have been working on AI custom chips and an open platform for AI for years. AI custom chips are the most complex silicon to design and manufacture, with advanced design parameters such as die sizes of 600 to 800 mm², 60B to 110B+ transistors in the core die, 96GB to 128GB of HBM capacity, 30Tb to 50Tb of network bandwidth, and multiple dies integrated using 2.5D packaging technology. Every year, we produce more custom AI silicon chips; our AI ASICs in production have increased 3x since 2018. Not only do they deliver higher performance, but they also require 20% less area and power than the leading GPUs/CPUs in the market.

Open Hardware Platform a Must for Data Centers

Broadcom offers an open platform that drives unique approaches to managing AI compute applications. Allow me to explain.

Other systems, built on an open platform, are scalable alternatives. Just today, Meta shared an update during the company’s AI INFRA @Scale event on its first-generation, in-house, custom-built MTIA AI accelerator. We believe Meta’s customized approach with MTIA will ensure maximum performance and the lowest TCO for Meta’s specific workloads.

Some current systems offered by other vendors and being deployed for AI are closed systems. They require customers to use something that gives them no freedom in either hardware or software. The protocols are closed, and most of the architectural choices are already made by the vendor. It is like buying a car that has all the colors and options picked for you. It works, but everybody else has a car just like yours.

At Broadcom, we work with our data center partners to advance this open platform approach.

We partner with data center customers (think hyperscalers) to provide custom ASICs for their AI compute applications. Broadcom brings its deep portfolio of patented solutions in networking, network interface cards (NICs), buffer memory access, input/output (IO), patented Ethernet technology, peripheral component interconnect express (PCIe) switching, low-power silicon, complex packaging, fiber optics, and robust Ethernet connectivity, so our customers can freely architect their AI ASICs to optimize their workloads and lower their total cost of ownership (TCO) without compromise.

The Car Analogy for Our Custom Chips

Think of an AI system as something akin to a cool Porsche. Broadcom provides proven brakes, customized seats, all the safety features needed, proven electronics, and tested racing wheels, while the customer designs their own engine to meet their racing and driving needs. We coordinate the timing of our Porsche chassis and other components with the customer’s engine delivery and ensure that all parts are proven, so the customer has a winning customized racing car. We put the custom engine in and let the customer hit the gas.

Beyond Our Custom Chips

Broadcom also provides an open and ready ecosystem for the custom AI chips to network and communicate with each other. We have:

- Network interface cards (NICs) of all flavors, available as “chiplets” that can be connected to the AI chips with our patented chip-to-chip technology.

- A leading set of Ethernet switches that are optimized for AI traffic.

- Ethernet serializer/deserializers (SerDes) that are optimized to drive long copper cables at 100G or 200G.

- Proven silicon photonics modules that can be incorporated into our packaging so customers can lower their pluggable optics costs and their optics power.

Our open platform and custom AI ASICs give data center customers the opportunity to build their own systems and the architectural freedom to drive their roadmaps generation after generation. Our job is to keep our customers competitive in performance and total cost of ownership (TCO) while enabling them to lead architecturally with their own needs and intellect.

Our large manufacturing capability allows our customers to receive large or small quantities of their AI chips for their data centers, on time and with the highest quality.

It is mind-blowing to think that we are living in the era of the AI technological revolution, and we know that custom AI silicon chips and an open platform are a critical combination for driving more and more innovative applications for AI.

We encourage you to share your thoughts on your favorite social platform.